Meta Unveils Llama 3.1: The Most Powerful Open-Source AI Model

Discover Meta's groundbreaking Llama 3.1, the largest and most advanced open-source AI model. Explore its innovative architecture, extensive evaluations, and commitment to openness.

Meta has introduced Llama 3.1, its most advanced open-source AI model to date, with a flagship version of 405 billion parameters. The release is a milestone for open models in performance, scale, and openness. It reflects Meta's research and development work and gives the broader AI community a capable model to build on.

Setting New Standards in AI Performance

Llama 3.1 has been evaluated on more than 150 benchmark datasets covering a wide range of languages and tasks. These benchmarks, together with extensive human evaluations, indicate that Llama 3.1 is competitive with industry-leading models such as GPT-4, GPT-4o, and Claude 3.5 Sonnet, including in real-world scenarios.

Revolutionary Model Architecture

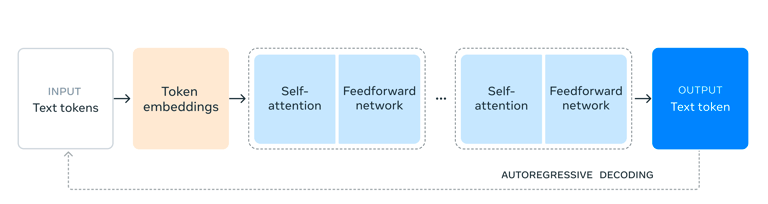

At the heart of Llama 3.1 is an optimized training stack that allowed Meta to train the 405 billion parameter model on over 15 trillion tokens using over 16,000 H100 GPUs, a significant increase in scale. The architecture is a standard decoder-only transformer with targeted adaptations, chosen for training stability and scalability. Combined with iterative post-training, this produces a model that performs well and behaves reliably.
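To put those scale figures in perspective, here is a minimal back-of-the-envelope sketch. It uses the common rule of thumb that training a dense transformer costs roughly 6 FLOPs per parameter per token. The parameter, token, and GPU counts come from the text above; the per-GPU peak throughput and the utilization fraction are illustrative assumptions, not figures reported by Meta.

```python
# Back-of-the-envelope training compute for a dense decoder-only transformer.
# Heuristic: about 6 FLOPs per parameter per training token
# (forward plus backward pass). This is an approximation, not a measurement.

PARAMS = 405e9   # 405 billion parameters (from the article)
TOKENS = 15e12   # "over 15 trillion" tokens, so treat this as a floor
GPUS = 16_000    # "over 16,000" H100 GPUs (from the article)

# Assumptions for illustration only, not figures from the article:
PEAK_FLOPS_PER_GPU = 1e15  # rough per-GPU peak throughput in FLOP/s
UTILIZATION = 0.4          # assumed fraction of peak that is sustained


def training_flops(params: float, tokens: float) -> float:
    """Approximate total training FLOPs with the 6 * N * D heuristic."""
    return 6.0 * params * tokens


def training_days(total_flops: float, gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    """Approximate wall-clock days given a sustained cluster throughput."""
    sustained = gpus * peak_flops_per_gpu * utilization
    return total_flops / sustained / 86_400  # 86,400 seconds per day


total = training_flops(PARAMS, TOKENS)
days = training_days(total, GPUS, PEAK_FLOPS_PER_GPU, UTILIZATION)
print(f"Estimated training compute: {total:.2e} FLOPs")
print(f"Estimated wall-clock time: {days:.0f} days")
```

Under these assumptions the estimate lands in the range of 10^25 FLOPs and about two months of cluster time. Changing the assumed utilization shifts the duration proportionally, so the result is an order-of-magnitude guide only.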

To achieve this, Meta made design choices that favor scalability and simplicity. It chose a standard decoder-only transformer rather than a mixture-of-experts model to maximize training stability. It also adopted an iterative post-training procedure in which each round applies supervised fine-tuning and direct preference optimization. Each round yields high-quality synthetic data, which improves the model's capabilities from one iteration to the next.
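For readers unfamiliar with direct preference optimization, the following is a minimal sketch of the standard DPO objective for a single preference pair, written in plain Python. It is not Meta's training code. The function name, argument names, and the `beta` value are illustrative assumptions, and the inputs are assumed to be summed log-probabilities of each response under the model being trained and under a frozen reference model.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one (chosen, rejected) response pair.

    Each argument is the summed log-probability of a full response.
    beta (an assumed value here) controls how strongly the policy is
    pushed away from the reference model.
    """
    # How much more the policy likes each response than the reference does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # loss = -log(sigmoid(logits)) = log(1 + exp(-logits)),
    # computed in a numerically stable form.
    return max(-logits, 0.0) + math.log1p(math.exp(-abs(logits)))


# If the policy equals the reference, the loss is log(2).
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
# If the policy favors the chosen response more than the reference does,
# the loss falls below log(2).
print(dpo_loss(-9.0, -13.0, -10.0, -12.0))
```

The loss decreases as the policy raises the probability of the preferred response relative to the rejected one, measured against the reference model, which is why no separate reward model is needed in the DPO step itself.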

Evaluation

Meta evaluated the model on more than 150 benchmark datasets spanning a diverse range of languages. Beyond automated tests, it also ran extensive human evaluations comparing Llama 3.1 with competing models in real-world scenarios.

The results are impressive. Llama 3.1 405B proved competitive with top foundation models such as GPT-4, GPT-4o, and Claude 3.5 Sonnet across a variety of tasks. Meta's smaller Llama 3.1 models were likewise competitive with closed and open models that have a similar number of parameters. Together, these results suggest that the Llama 3.1 family can meet a wide range of AI application needs.

Advanced Fine-Tuning Techniques

Llama 3.1's fine-tuning process has been refined to enhance the model's ability to follow detailed instructions and provide high-quality responses. The post-training involves multiple rounds of Supervised Fine-Tuning (SFT), Rejection Sampling (RS), and Direct Preference Optimization (DPO). These processes, supported by high-quality synthetic data generation and rigorous filtering, ensure that Llama 3.1 maintains exceptional performance across all capabilities, even with an expanded 128K context window.
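The rejection sampling step can be sketched as follows: draw several candidate responses for a prompt, score each one, and keep only the best as fine-tuning data. This is a minimal illustration under stated assumptions, not Meta's pipeline. The `generate` and `reward` callables are hypothetical stand-ins for a language model and a reward model, and the toy versions below exist only to make the example runnable.

```python
import itertools
from typing import Callable, Optional, Sequence, Tuple


def rejection_sample(prompt: str,
                     generate: Callable[[str], str],
                     reward: Callable[[str, str], float],
                     num_candidates: int = 4,
                     min_reward: Optional[float] = None
                     ) -> Optional[Tuple[str, float]]:
    """Sample candidates, score them, and return the best (response, score).

    Returns None when min_reward is set and no candidate reaches it,
    which acts as the filtering step.
    """
    candidates = [generate(prompt) for _ in range(num_candidates)]
    scored = [(reward(prompt, c), c) for c in candidates]
    best_score, best = max(scored, key=lambda pair: pair[0])
    if min_reward is not None and best_score < min_reward:
        return None
    return best, best_score


def make_toy_generator(responses: Sequence[str]) -> Callable[[str], str]:
    """Hypothetical generator that cycles through fixed responses."""
    cycle = itertools.cycle(responses)
    return lambda prompt: next(cycle)


def toy_reward(prompt: str, response: str) -> float:
    """Hypothetical reward: number of distinct words in the response."""
    return float(len(set(response.split())))


responses = ["Paris.", "The capital of France is Paris.", "I am not sure."]
result = rejection_sample("What is the capital of France?",
                          make_toy_generator(responses), toy_reward,
                          num_candidates=3)
print(result)
```

In a real pipeline the kept responses would be added to the supervised fine-tuning set for the next round, and prompts for which `rejection_sample` returns `None` would be dropped or regenerated.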

Integration with the Llama Ecosystem

Meta's vision for Llama models extends beyond individual capabilities to a broader system integration. Llama 3.1 is designed to work seamlessly with external tools, offering developers a flexible platform to create custom applications. The release includes new components like Llama Guard 3, a multilingual safety model, and Prompt Guard, a prompt injection filter. These tools, along with sample applications, are open source, encouraging community collaboration and innovation.

Commitment to Open Source

A defining feature of Llama 3.1 is its open-source nature. Meta provides access to model weights, allowing developers to fully customize and extend the model for their unique needs. This openness fosters a vibrant ecosystem of innovation, where developers can experiment, iterate, and build upon the foundational work of Meta. The commitment to open source ensures that the benefits of AI are accessible to a wider audience, promoting equity and diversity in AI development.

Practical Applications and Developer Support

Recognizing the challenges of working with a model of this scale, Meta has equipped developers with tools and resources to maximize the potential of Llama 3.1 405B. From real-time and batch inference to synthetic data generation and retrieval-augmented generation (RAG), the Llama ecosystem provides comprehensive support. Partnerships with industry leaders like AWS, NVIDIA, and Databricks ensure that developers have access to optimized solutions for both cloud and on-prem deployments.
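A rough calculation shows why a model of this scale is challenging to serve. The sketch below estimates the memory needed for the weights alone at several numeric precisions and the minimum number of accelerators required to hold them. The 80 GB per-device memory and the list of precisions are illustrative assumptions, and the estimate ignores activations, the key-value cache, and framework overhead, so real deployments need more.

```python
import math

PARAMS = 405e9        # 405 billion parameters (from the article)
GPU_MEMORY_GB = 80    # assumption: an accelerator with 80 GB of memory

# Assumed storage cost per parameter for a few common precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory for the weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def min_gpus_for_weights(params: float, bytes_per_param: float,
                         gpu_memory_gb: float) -> int:
    """Lower bound on devices needed just to hold the weights."""
    return math.ceil(weight_memory_gb(params, bytes_per_param) / gpu_memory_gb)


for name, nbytes in BYTES_PER_PARAM.items():
    size = weight_memory_gb(PARAMS, nbytes)
    gpus = min_gpus_for_weights(PARAMS, nbytes, GPU_MEMORY_GB)
    print(f"{name}: {size:.1f} GB of weights, at least {gpus} devices")
```

Even at reduced precision the weights do not fit on a single device under these assumptions, which is why multi-GPU inference and the optimized partner solutions mentioned above matter for the 405B model.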

Conclusion

Llama 3.1 is a groundbreaking advancement in the AI landscape, combining unparalleled performance, innovative architecture, and a steadfast commitment to openness. Meta's release of Llama 3.1 not only pushes the boundaries of what AI models can achieve but also democratizes access to cutting-edge technology. As developers and researchers around the world begin to explore the possibilities of Llama 3.1, we can expect a new wave of innovation that will shape the future of artificial intelligence.