An In-Depth Review of Google Gemma 2 2B

Gemma 2 2B is a lightweight, state-of-the-art text-to-text model from Google. It suits a range of text generation tasks, ships with open weights, and can be deployed on limited hardware.

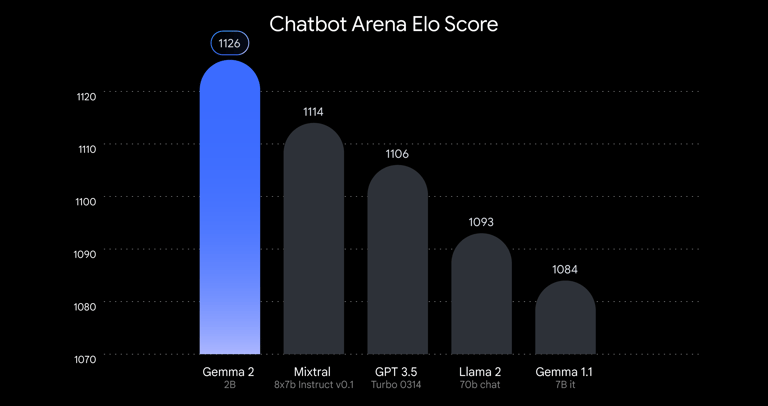

Google has just announced its latest AI model, Gemma 2 2B, a lightweight yet powerful model that has reportedly outperformed larger models such as GPT-3.5 and Mixtral 8x7B on key benchmarks. The release comes only weeks after Google introduced the best-in-class Gemma 2 models, further underlining its commitment to pushing the boundaries of AI technology.

Gemma 2 2B is not just a smaller model; it is packed with advanced safety features that set it apart from its predecessors. Designed to minimize biases and ensure ethical AI usage, Gemma 2 2B represents a significant leap forward in responsible AI development.

In addition to Gemma 2 2B, Google has announced two new tools: ShieldGemma and Gemma Scope.

ShieldGemma is a set of safety classifiers that detect harmful content in model inputs and outputs. Gemma Scope is an interpretability toolkit that gives users deeper insight into the model's decision-making processes.

The Gemma 2 2B model is a highly anticipated addition to the Gemma 2 family. This lightweight model produces outsized results by learning from larger models through distillation. In fact, Gemma 2 2B surpasses all GPT-3.5 models on the Chatbot Arena, demonstrating strong conversational abilities.
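To make the idea of distillation concrete, here is a minimal sketch of a distillation loss for a single token position. It assumes the common formulation in which the student is trained to match the teacher's next-token distribution through a cross-entropy term; the function names and the toy logits are illustrative and are not taken from Google's training code.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_total = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_total for x in logits]

def distillation_loss(teacher_logits, student_logits):
    """Cross-entropy of the student's distribution against the teacher's."""
    teacher_probs = [math.exp(lp) for lp in log_softmax(teacher_logits)]
    student_log_probs = log_softmax(student_logits)
    return -sum(p * lp for p, lp in zip(teacher_probs, student_log_probs))

# Toy logits over a 3-token vocabulary (illustrative values only).
teacher = [2.0, 0.5, -1.0]
matched = distillation_loss(teacher, teacher)            # student agrees with teacher
mismatched = distillation_loss(teacher, [-1.0, 0.5, 2.0])  # student disagrees
```

The loss is smallest when the student reproduces the teacher's distribution, which is what lets a small model absorb the soft predictions of a larger one rather than only the one-hot training labels.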

Gemma 2 2B Offers:

Exceptional Performance:

Gemma 2 2B delivers best-in-class performance for its size, outperforming other open models in its category. This model excels in various AI tasks, providing superior results with remarkable efficiency.

Flexible and Cost-Effective Deployment:

Run Gemma 2 2B efficiently on a wide range of hardware—from edge devices and laptops to robust cloud deployments with Vertex AI and Google Kubernetes Engine (GKE). To further enhance its speed, it is optimized with the NVIDIA TensorRT-LLM library and is available as an NVIDIA NIM. This optimization targets various deployments, including data centers, cloud, local workstations, PCs, and edge devices—using NVIDIA RTX, NVIDIA GeForce RTX GPUs, or NVIDIA Jetson modules for edge AI. Additionally, Gemma 2 2B seamlessly integrates with Keras, JAX, Hugging Face, NVIDIA NeMo, Ollama, Gemma.cpp, and soon MediaPipe for streamlined development.

Open and Accessible:

Gemma 2 2B is available under the commercially-friendly Gemma terms for research and commercial applications. It's even small enough to run on the free tier of T4 GPUs in Google Colab, making experimentation and development easier than ever.

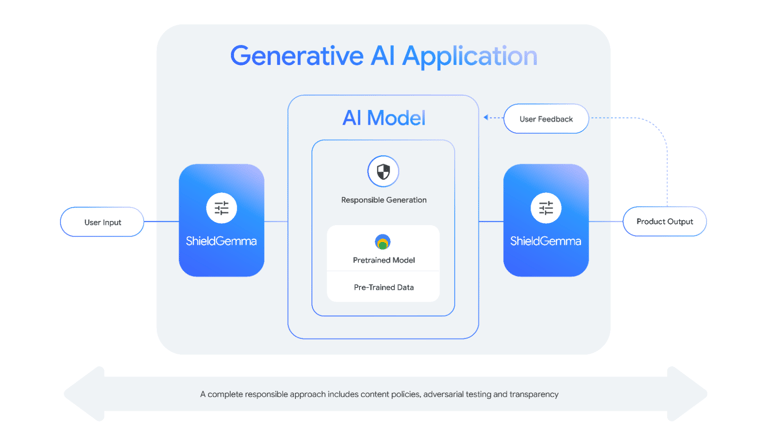

Deploying open models responsibly to ensure engaging, safe, and inclusive AI outputs requires significant effort from developers and researchers. To aid in this process, Google is introducing ShieldGemma, a series of state-of-the-art safety classifiers designed to detect and mitigate harmful content in AI model inputs and outputs. ShieldGemma specifically targets four key areas of harm:

Hate Speech

Harassment

Sexually Explicit Content

Dangerous Content

These open classifiers complement the existing suite of safety classifiers in Google's Responsible AI Toolkit. This toolkit includes a methodology for building classifiers tailored to specific policies with a limited number of datapoints, as well as existing Google Cloud off-the-shelf classifiers served via API.

Key Features of ShieldGemma

State-of-the-Art Performance: Built on top of Gemma 2, ShieldGemma represents the industry's leading safety classifiers, providing top-tier performance in detecting and mitigating harmful content.

Flexible Sizes: ShieldGemma offers various model sizes to meet diverse needs. The 2B model is ideal for online classification tasks, while the 9B and 27B versions provide higher performance for offline applications where latency is less of a concern. All sizes leverage NVIDIA speed optimizations for efficient performance across hardware.

Open and Collaborative: The open nature of ShieldGemma encourages transparency and collaboration within the AI community, contributing to the future of machine learning industry safety standards.

Performance and Impact

ShieldGemma's evaluation results are reported as optimal F1 and AU-PRC, with higher scores indicating better performance. Probabilities are calculated with parameters α=0 and T=1. On ShieldGemma's own prompt and response datasets, as well as external benchmarks such as OpenAI Mod and ToxicChat, the classifiers outperform the baseline models.
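As a rough illustration of the "optimal F1" metric, the sketch below sweeps the decision threshold over the classifier's scores and keeps the best F1. This assumes that "optimal" means the maximum F1 over thresholds; the scores and labels are made-up toy data, not ShieldGemma results.

```python
def f1_at_threshold(scores, labels, threshold):
    """F1 when every score >= threshold is flagged as harmful."""
    predicted = [s >= threshold for s in scores]
    tp = sum(1 for p, y in zip(predicted, labels) if p and y)
    fp = sum(1 for p, y in zip(predicted, labels) if p and not y)
    fn = sum(1 for p, y in zip(predicted, labels) if not p and y)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def optimal_f1(scores, labels):
    """Best (F1, threshold) pair over thresholds taken from the observed scores."""
    return max((f1_at_threshold(scores, labels, t), t) for t in set(scores))

# Toy data: classifier probabilities and ground-truth labels (1 = harmful).
scores = [0.9, 0.8, 0.4, 0.3, 0.1]
labels = [1, 1, 0, 1, 0]
best_f1, best_threshold = optimal_f1(scores, labels)
```

Reporting the best achievable F1 removes the choice of threshold from the comparison, so classifiers with differently calibrated scores can be compared on equal footing.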

Industry Endorsement

Rebecca Weiss, Executive Director of MLCommons, emphasized the importance of industry-wide investment in developing high-performance safety evaluators: "As AI continues to mature, the entire industry will need to invest in developing high-performance safety evaluators. We're glad to see Google making this investment and look forward to their continued involvement in our AI Safety Working Group."

Model Architecture of Google Gemma

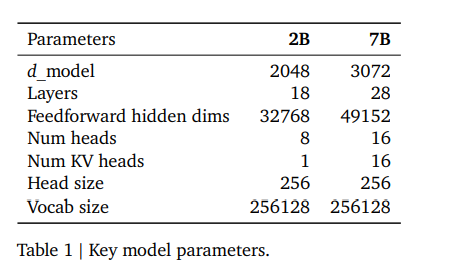

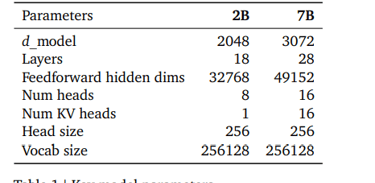

The Gemma model architecture, as described in the original Gemma technical report for the 2B and 7B models, is based on the transformer decoder (Vaswani et al., 2017). The core parameters of the architecture are detailed in Table 1. The models are trained on a context length of 8192 tokens and incorporate several improvements proposed after the original transformer paper, as outlined below:

Key Improvements:

Multi-Query Attention (Shazeer, 2019):

The 2B model employs multi-query attention with a single key-value head (num_kv_heads = 1), which has proven effective at smaller scales. In contrast, the 7B model utilizes multi-head attention.
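A small pure-Python sketch of multi-query attention may help here. Every query head has its own queries, but all heads read from one shared set of keys and values, which corresponds to num_kv_heads = 1. The shapes, the causal mask, and the toy numbers are assumptions chosen for illustration; real implementations use batched tensor operations.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def multi_query_attention(queries, keys, values):
    """Causal multi-query attention.

    queries: [num_heads][seq_len][head_dim], one set of queries per head
    keys, values: [seq_len][head_dim], shared by every head
    returns: [num_heads][seq_len][head_dim]
    """
    head_dim = len(keys[0])
    scale = 1.0 / math.sqrt(head_dim)
    outputs = []
    for head_queries in queries:
        head_out = []
        for t, q in enumerate(head_queries):
            # Causal mask: position t only attends to positions 0..t.
            scores = [scale * sum(a * b for a, b in zip(q, keys[s]))
                      for s in range(t + 1)]
            weights = softmax(scores)
            head_out.append([sum(w * values[s][d] for s, w in enumerate(weights))
                             for d in range(head_dim)])
        outputs.append(head_out)
    return outputs

# Two query heads, two positions, head_dim = 2 (toy values).
queries = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [1.0, 1.0]]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0]]
out = multi_query_attention(queries, keys, values)
```

Because keys and values are stored once rather than once per head, the key-value cache kept during generation shrinks by a factor equal to the number of query heads.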

RoPE Embeddings (Su et al., 2021):

Instead of absolute positional embeddings, Gemma uses rotary positional embeddings in each layer. Separately, the token embeddings are shared across inputs and outputs to reduce model size.
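The following sketch shows the core of rotary positional embeddings: each pair of dimensions in a query or key vector is rotated by an angle proportional to the token position. It assumes the adjacent-pair layout and the conventional base of 10000; actual implementations may pair dimensions differently.

```python
import math

def rope(vec, position, base=10000.0):
    """Rotate consecutive dimension pairs of vec by position-dependent angles."""
    d = len(vec)
    assert d % 2 == 0, "RoPE needs an even number of dimensions"
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)  # lower pairs rotate faster
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])
    return out

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

q = [1.0, 2.0, 3.0, 4.0]
k = [0.5, -1.0, 2.0, 0.0]
# Attention scores depend only on the relative offset (here 2 in both cases).
score_a = dot(rope(q, 5), rope(k, 3))
score_b = dot(rope(q, 2), rope(k, 0))
```

The two scores agree because rotating both vectors by the same extra angle leaves their dot product unchanged, which is how RoPE encodes relative position without a separate positional embedding table.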

GeGLU Activations (Shazeer, 2020):

The standard ReLU non-linearity is replaced by the approximated GeGLU activation function, enhancing model performance.
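As a sketch of what a GeGLU unit computes: the input is projected twice, one projection passes through GELU and acts as a gate, and the two results are multiplied elementwise. Here "approximated" is read as the tanh approximation of GELU, which is an assumption on my part. The weight matrices are toy values, and the down projection that normally follows in a feedforward block is omitted.

```python
import math

def gelu_tanh(x):
    """Tanh approximation of the GELU activation."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))

def matvec(matrix, vec):
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]

def geglu(x, w_gate, w_up):
    """GeGLU: GELU(W_gate x) multiplied elementwise with (W_up x)."""
    gate = [gelu_tanh(g) for g in matvec(w_gate, x)]
    up = matvec(w_up, x)
    return [g * u for g, u in zip(gate, up)]

# Toy 2-dimensional example with hand-picked weights.
x = [1.0, -1.0]
w_gate = [[1.0, 0.0], [0.0, 1.0]]
w_up = [[2.0, 0.0], [0.0, 2.0]]
hidden = geglu(x, w_gate, w_up)
```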

RMSNorm (Zhang and Sennrich, 2019):

RMSNorm is used to normalize the input of each transformer sub-layer, including the attention and feedforward layers, stabilizing the training process.
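RMSNorm itself is short enough to write out. The sketch below follows the formulation of Zhang and Sennrich: divide the vector by its root mean square, then apply a learned per-dimension scale. The epsilon value and the plain multiplicative weight are assumptions; individual implementations may parameterize the scale differently.

```python
import math

def rms_norm(x, weight, eps=1e-6):
    """Scale x to unit root-mean-square, then apply a per-dimension gain."""
    mean_square = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_square + eps)
    return [w * v * inv_rms for w, v in zip(weight, x)]

normalized = rms_norm([3.0, -4.0], weight=[1.0, 1.0])
output_mean_square = sum(v * v for v in normalized) / len(normalized)
```

Unlike LayerNorm, there is no mean subtraction and no bias term, which makes the operation cheaper while still keeping activations at a stable scale.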

Training Infrastructure

The training of Gemma models utilizes TPUv5e deployed in pods of 256 chips, arranged in a 16 x 16 2D torus configuration.

Gemma 2B: Trained across 2 pods, totaling 512 TPUv5e, with 256-way data replication within a pod.

Gemma 7B: Trained across 16 pods, totaling 4096 TPUv5e, utilizing 16-way model sharding and 16-way data replication within a pod. The optimizer state is sharded using techniques akin to ZeRO-3.
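The chip counts above can be checked with a few lines of arithmetic. The variable names are illustrative, and treating the 2B layout as having no model sharding is an inference from the text rather than something it states outright.

```python
CHIPS_PER_POD = 16 * 16  # a 16 x 16 2D torus

def total_chips(num_pods):
    return num_pods * CHIPS_PER_POD

gemma_2b_chips = total_chips(2)    # 2 pods
gemma_7b_chips = total_chips(16)   # 16 pods

# Within a pod, model sharding times data replication should use every chip.
gemma_2b_layout = 1 * 256   # assumed 1-way model sharding, 256-way data replication
gemma_7b_layout = 16 * 16   # 16-way model sharding, 16-way data replication
```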

Beyond a pod, data-replica reduction is performed over the data-center network using the Pathways approach (Barham et al., 2022). The entire training run is orchestrated by a single Python process using the ‘single controller’ programming paradigm of JAX (Roberts et al., 2023), and the training step relies on the GSPMD partitioner (Xu et al., 2021) together with the MegaScale XLA compiler (XLA, 2019).

Training Data

The Gemma 2B and 7B models are trained on 3 trillion and 6 trillion tokens, respectively. The data consists primarily of English content from web documents, mathematical texts, and code. Unlike the Gemini models, Gemma 2B and 7B are not multimodal, nor are they trained for state-of-the-art performance on multilingual tasks. Instead, they focus on English-language text applications.

For compatibility, Gemma uses a subset of the SentencePiece tokenizer (Kudo and Richardson, 2018) of the Gemini models. The tokenizer splits digits, preserves extra whitespace, and falls back to byte-level encodings for unknown tokens, following the techniques used by Chowdhery et al. (2022) and the Gemini Team (2023). The vocabulary size is 256,000 tokens.
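The three tokenizer behaviours named above can be illustrated with a toy tokenizer. This is not SentencePiece and does not reproduce Gemma's vocabulary: the greedy longest-match rule, the tiny vocabulary, and the plain space character are all simplifications. The `<0xNN>` notation for byte fallback follows the convention SentencePiece uses for byte pieces.

```python
def toy_tokenize(text, vocab):
    """Toy tokenizer: digits split one by one, whitespace kept, byte fallback."""
    tokens = []
    i = 0
    while i < len(text):
        ch = text[i]
        if ch in "0123456789":
            tokens.append(ch)  # every digit becomes its own token
            i += 1
            continue
        # Greedy longest match against the (digit-free) vocabulary.
        match = None
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                match = text[i:j]
                break
        if match is None:
            # Unknown character: emit one token per UTF-8 byte.
            tokens.extend(f"<0x{b:02X}>" for b in ch.encode("utf-8"))
            i += 1
        else:
            tokens.append(match)
            i += len(match)
    return tokens

vocab = {"ab", "a", "b", " "}
tokens = toy_tokenize("ab  12é", vocab)
# → ['ab', ' ', ' ', '1', '2', '<0xC3>', '<0xA9>']
```

Both spaces survive as separate tokens, "12" is never merged into one piece, and the unseen character "é" is still representable through its two UTF-8 bytes, so no input maps to an unknown token.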

Filtering

The pretraining dataset undergoes rigorous filtering to mitigate the risk of generating unwanted or unsafe outputs. This includes the removal of personal information and sensitive data using both heuristic and model-based classifiers. Additionally, evaluation sets are filtered out from the pretraining data mixture to prevent data leakage and reduce the risk of the model reciting sensitive outputs. A series of ablations on both the 2B and 7B models helped determine the final data mixture, ensuring a balance between data quality and model performance.

Instruction Tuning

Supervised Fine-Tuning (SFT)

Gemma 2B and 7B are fine-tuned with supervised fine-tuning (SFT) on a mix of text-only, English-only synthetic and human-generated prompt-response pairs, followed by reinforcement learning from human feedback (RLHF). The reward model is trained on labeled English-only preference data, and the policy is based on a set of high-quality prompts. Both stages are important for improving downstream automatic evaluations and human preference evaluations of model outputs.

Data Selection and Evaluation

For supervised fine-tuning, data mixtures are selected based on LM-based side-by-side evaluations (Zheng et al., 2023). Responses generated by the test model are compared with those from a baseline model, shuffled randomly, and evaluated by a larger, high-capability model. Different prompt sets are created to highlight specific capabilities, such as instruction following, factual accuracy, creativity, and safety. LM-based judges use known strategies like chain-of-thought prompting (Wei et al., 2022), rubrics, and constitutions (Bai et al., 2022) to align with human preferences.
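A side-by-side evaluation loop of this kind can be sketched in a few lines. Everything here is hypothetical scaffolding: `test_model`, `baseline_model`, and `judge` are placeholder callables, and the length-based judge stands in for the larger language model that would do the real judging.

```python
import random

def side_by_side_win_rate(prompts, test_model, baseline_model, judge, seed=0):
    """Fraction of prompts where the judge prefers the test model's response."""
    rng = random.Random(seed)
    wins = 0
    for prompt in prompts:
        candidates = [("test", test_model(prompt)),
                      ("baseline", baseline_model(prompt))]
        rng.shuffle(candidates)  # hide which model produced which response
        # The judge sees only the prompt and two anonymous responses,
        # and returns 0 or 1 for the response it prefers.
        choice = judge(prompt, candidates[0][1], candidates[1][1])
        if candidates[choice][0] == "test":
            wins += 1
    return wins / len(prompts)

def longer_is_better(prompt, response_a, response_b):
    """Stand-in judge for illustration only: prefers the longer response."""
    return 0 if len(response_a) >= len(response_b) else 1

win_rate = side_by_side_win_rate(
    ["p1", "p2", "p3"],
    test_model=lambda p: p + " with a detailed answer",
    baseline_model=lambda p: p,
    judge=longer_is_better,
)
```

Shuffling the pair before judging guards against position bias, since a judge that systematically favors the first response would otherwise inflate one model's win rate.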

Filtering

Synthetic data undergoes multiple stages of filtering to remove personal information, unsafe or toxic outputs, incorrect self-identifications, and duplicates. Following the Gemini approach, subsets of data that encourage better in-context attribution, hedging, and refusals are included to minimize hallucinations and improve performance on factuality metrics without compromising overall model performance.

Formatting

Instruction-tuned models are trained with a specific formatter that annotates all examples with additional information during training and inference. This formatter serves two purposes: indicating roles in a conversation (e.g., User role) and delineating turns in multi-turn conversations. Special control tokens reserved in the tokenizer facilitate this process, ensuring coherent generations even in complex dialogue scenarios.
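A minimal sketch of such a formatter is shown below. It assumes the control tokens `<start_of_turn>` and `<end_of_turn>` and the role names `user` and `model`, which is my understanding of the published Gemma chat format; verify them against the tokenizer you actually use. The function name is hypothetical, and the beginning-of-sequence token is left to the tokenizer.

```python
def format_gemma_chat(turns, add_generation_prompt=True):
    """Render (role, text) turns with Gemma-style control tokens.

    Assumed token strings: <start_of_turn>, <end_of_turn>; roles: user, model.
    """
    parts = []
    for role, text in turns:
        if role not in ("user", "model"):
            raise ValueError(f"unknown role: {role!r}")
        parts.append(f"<start_of_turn>{role}\n{text}<end_of_turn>\n")
    if add_generation_prompt:
        # Open a model turn so that generation continues as the model.
        parts.append("<start_of_turn>model\n")
    return "".join(parts)

prompt = format_gemma_chat([
    ("user", "Who are you?"),
    ("model", "I am Gemma."),
    ("user", "What can you do?"),
])
```

In practice a library chat template usually does this for you, but writing it out shows the two jobs the formatter performs: marking who is speaking and marking where each turn ends.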

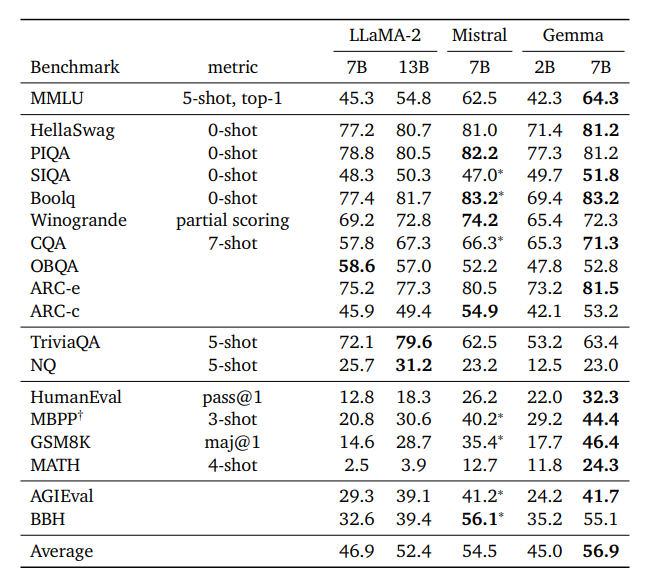

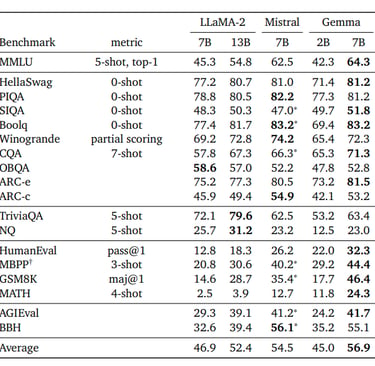

On the MMLU benchmark (Hendrycks et al., 2020), Gemma 7B outperforms all open-source alternatives of the same or smaller scale. It also surpasses several larger models, including LLaMA2 13B. However, human expert performance is gauged at 89.8% by the benchmark authors, and Gemini Ultra is the first model to exceed this threshold, highlighting the potential for further improvements to achieve Gemini and human-level performance.

Gemma models show particularly strong performance on mathematics and coding benchmarks. These tasks are often used to evaluate the general analytical capabilities of models. Gemma models' superior performance in these areas underscores their robust analytical prowess.

Gemma Scope provides unprecedented transparency into the decision-making processes of Gemma 2 models. Using sparse autoencoders (SAEs), it makes the inner workings of the models more interpretable. Key features include:

Open SAEs: Over 400 SAEs covering all layers of Gemma 2 2B and 9B models.

Interactive Demos: Explore SAE features and model behavior on Neuronpedia without writing code.

Easy-to-Use Repository: Access code and examples for interfacing with SAEs and Gemma 2 models.

Gemma Scope empowers researchers to build more understandable, accountable, and reliable AI systems by providing deeper insights into AI decision-making.
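To show what a sparse autoencoder does to an activation vector, here is a tiny forward pass. It assumes a JumpReLU-style SAE, which is my understanding of the Gemma Scope design: features whose pre-activation does not exceed a learned threshold are set to zero, and the activation is reconstructed as a weighted sum of the remaining features. All weights, thresholds, and dimensions are toy values.

```python
def matvec(matrix, vec):
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]

def sae_encode(x, w_enc, b_enc, threshold):
    """Map an activation vector to sparse features (JumpReLU-style gating)."""
    pre = [p + b for p, b in zip(matvec(w_enc, x), b_enc)]
    return [p if p > t else 0.0 for p, t in zip(pre, threshold)]

def sae_decode(features, w_dec, b_dec):
    """Reconstruct the activation vector from the sparse features."""
    return [r + b for r, b in zip(matvec(w_dec, features), b_dec)]

# Toy SAE: 2-dimensional activations, 3 features.
w_enc = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b_enc = [0.0, 0.0, -1.0]
threshold = [0.5, 0.5, 0.5]
w_dec = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]
b_dec = [0.0, 0.0]

activation = [1.0, 0.2]
features = sae_encode(activation, w_enc, b_enc, threshold)
reconstruction = sae_decode(features, w_dec, b_dec)
```

Only one of the three features fires for this input, and that sparsity is the point: each active feature can be inspected on its own, at the cost of a small reconstruction error.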

Google Gemma 2 represents a significant step forward in the realm of AI. By prioritizing safety, transparency, and efficiency, Google has created a model that is not only powerful but also responsible. As AI continues to evolve, innovations like Gemma 2 will play a crucial role in shaping a future where technology serves humanity ethically and effectively.

Resources for Google Gemma 2:

https://huggingface.co/google/gemma-2-2b

https://storage.googleapis.com/deepmind-media/gemma/gemma-report.pdf